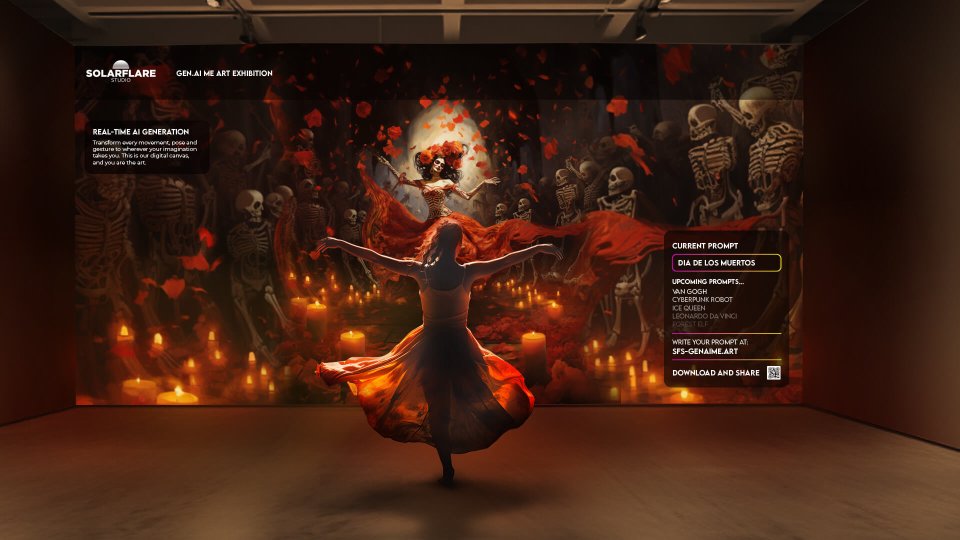

As 2024 unfolds, the Solarflare team are eager to explore a transformative era in experiential design, driven by rapid advancements in real-time AI.

Current State of Play

Our journey in technology has been marked by significant strides, particularly in the realm of high-quality image generation. Tools like Stable Diffusion, Runway, Midjourney, and DALL-E have broadened the creative horizon. These tools have traditionally catered to offline processes that require significant rendering time. In exhibitions and galleries, for instance, data visualisation has become increasingly sophisticated, yet such experiences rarely let visitors enter a prompt and instantly see images and videos generated in response.

The Next Step Forward in AI

This year is set to be a turning point, with technologies like StreamDiffusion and similar systems aiming to make frame-by-frame image generation more responsive and adaptive. The key is overcoming the hurdles of image generation speed and the latency of the content being created. We have a vision of how these systems can be integrated into experiential activations, drawing users into a more engaging brand experience.

User Experience in Real Time

Imagine an interactive space where each movement you make is transformed into artistic expression. The potential of real-time AI is vast. It makes it possible to alter visual narratives based on user movements, instantly changing prompts or styles. Users can even input prompts and styles directly, or manipulate video on screen through a combination of sound, motion, and prompting, creating a deeply personalised interactive experience. Essentially, the audience will be able to generate a piece of art in real time, however they like, making this an ideal way for brands to showcase creativity and technical innovation.
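To make the idea of sound, motion, and prompting driving the visuals more concrete, here is a minimal Python sketch of how sensor readings might be mapped to generation controls. It is purely illustrative: the style list, the `FrameControls` type, the `controls_from_input` function, and the strength range are all assumptions made for this example, not part of any specific tool or of our own pipeline.

```python
from dataclasses import dataclass

# Hypothetical style prompts, ordered from calm to energetic (illustrative only).
STYLES = [
    "soft watercolour wash",
    "bold ink illustration",
    "neon abstract light trails",
]


@dataclass
class FrameControls:
    prompt: str      # text prompt handed to the image generator
    strength: float  # how strongly the style is applied, 0.3 to 0.9 here


def clamp(value: float, low: float = 0.0, high: float = 1.0) -> float:
    """Keep a sensor reading inside the expected range."""
    return max(low, min(high, value))


def controls_from_input(motion: float, audio_level: float,
                        user_prompt: str = "") -> FrameControls:
    """Map normalised motion and audio readings (0.0 to 1.0) to controls."""
    motion = clamp(motion)
    audio_level = clamp(audio_level)

    # More movement selects a more energetic style.
    index = min(int(motion * len(STYLES)), len(STYLES) - 1)
    style = STYLES[index]

    # A prompt typed by the visitor becomes the subject; otherwise use a default.
    subject = user_prompt.strip() or "abstract shapes"

    # Louder sound pushes the style harder, within an assumed fixed range.
    strength = round(0.3 + 0.6 * audio_level, 2)

    return FrameControls(prompt=f"{subject}, {style}", strength=strength)
```

In a live installation, something like `controls_from_input` would be called once per frame, with the motion and audio values coming from cameras and microphones and the result passed on to whichever image generator is in use.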

Want to explore the possibilities of new technologies? Get in touch to explore ideas.

Striking a Balance Between Potential and Limitations

Incorporating real-time AI into experiential design brings immense possibilities alongside significant challenges. The most notable hurdle is the processing power that technologies like Stable Diffusion need to operate in real time: robust computing resources are required to minimise latency, so that the generated images are not just responsive but also of high quality. The hardware for this typically includes high-end GPUs and dedicated processing units, which may limit accessibility and scalability in some environments. However, with the right setup, integrated with live audio-visual tools such as TouchDesigner or Notch, we’ll be able to manipulate content in a number of exciting ways, projecting the video content that users generate onto surfaces or displaying it on LED screens.
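The relationship between latency and responsiveness comes down to simple arithmetic, sketched below in Python. The function names and the example figures are assumptions chosen for illustration; they are not measurements of Stable Diffusion or of any particular hardware.

```python
def frame_budget_ms(target_fps: float) -> float:
    """Time available to produce one frame at the target frame rate."""
    if target_fps <= 0:
        raise ValueError("target_fps must be positive")
    return 1000.0 / target_fps


def achievable_fps(generation_ms: float, overhead_ms: float = 0.0) -> float:
    """Frame rate reached when each frame takes generation plus overhead time."""
    total_ms = generation_ms + overhead_ms
    if total_ms <= 0:
        raise ValueError("total frame time must be positive")
    return 1000.0 / total_ms


def meets_target(generation_ms: float, target_fps: float,
                 overhead_ms: float = 0.0) -> bool:
    """True if generation plus overhead fits inside the per-frame budget."""
    return generation_ms + overhead_ms <= frame_budget_ms(target_fps)


# Illustrative figures only: a 25 fps target leaves 40 ms per frame.
print(frame_budget_ms(25))      # 40.0
print(achievable_fps(40, 10))   # 20.0
print(meets_target(30, 25, 5))  # True
print(meets_target(50, 25))     # False
```

Here `overhead_ms` stands in for everything outside image generation itself, such as capturing input and sending the frame to a projector or LED screen, which is why faster hardware alone does not guarantee a responsive experience.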

Despite these challenges, the push towards real-time AI remains a key focus, promising a future where experiential design is not only interactive but also instantaneously responsive and dynamically creative.

Feel free to check out our exploration of AI’s Role in Sound and Visual Design, which uses Stable Diffusion and TouchDesigner to create an audio-reactive visual.

Solarflare’s Vision

We’re excited to be at the helm of this revolution. Our pipelines are being adapted for both brand activations and art exhibitions, maximising the potential of advanced computer graphics and real-time processing.

The team’s trials with these technologies have been illuminating, revealing the potential of merging real-time AI with sophisticated graphics to create captivating experiences. As we continue these trials, we invite you to join us in exploring the vast potential of real-time AI in experiential design and pushing the limits of what’s possible in this vibrant and evolving field.