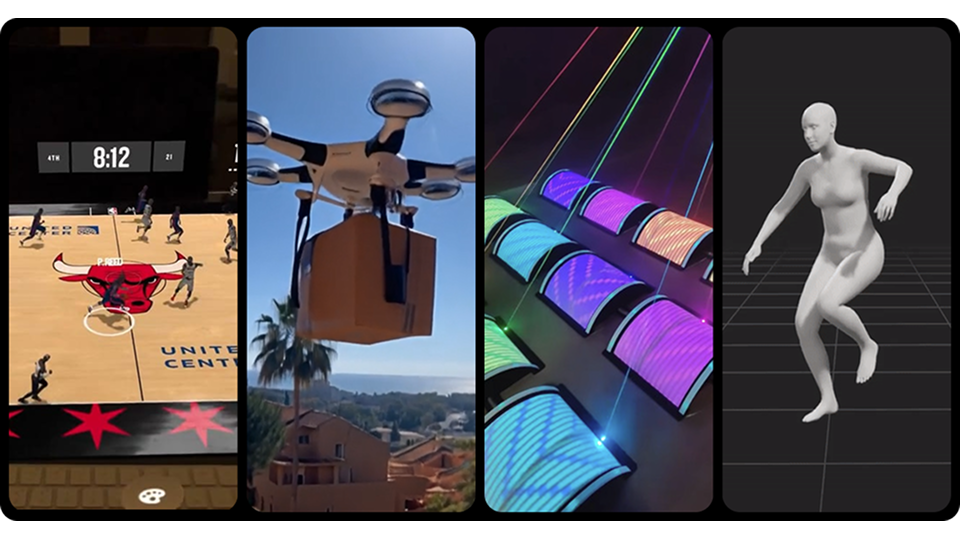

Emerging innovations in AI tracking and face-swapping are transforming interactive content. This month, real-time motion tracking gets a major boost as TouchDesigner’s MediaPipe plugin adds OpenPose rendering, while DeepFaceLab’s latest update makes AI face-swaps smoother and more realistic than ever.

Each month, we highlight the latest technological advancements alongside creative experiments that inspire us. This includes new AI tools, XR applications, and interactive experiences that can drive brand activations and immersive events. Our goal is to showcase innovations that are relevant for brands looking to push creative boundaries.

Here’s what’s new, how it’s shaping interactive experiences, and where these tools can be applied.

🌀 Immersive Tech (AR, VR & XR)

1 - NBA Tabletop Mode for Vision Pro – AR Basketball Viewing

What’s new?

NBA League Pass has introduced a feature that allows live basketball games to be viewed on a tabletop in AR using Apple Vision Pro, offering a more interactive way to engage with sports content.

Why it matters?

Sports broadcasts are evolving, and this is a perfect example of how AR can enhance engagement. Real-time stats, alternate camera angles, and virtual overlays give fans control over how they experience the game. We see this as a step towards more interactive, data-rich sports viewing that could easily extend to brand activations and sponsorships.

Where this is useful:

AR-enhanced sports broadcasting

Fan engagement with customisable viewing experiences

Branded digital activations for live events

Video Credit: Todd Moyer

2 - Capturing Locations for AR Exploration

What’s new?

A recent project showcased how real-world locations can be scanned and brought into AR, allowing users to explore detailed 3D scenes in augmented space.

Why it matters?

We see this as a powerful way to bring physical spaces into digital activations. Capturing locations in 3D and integrating them into AR offers endless possibilities for virtual tourism, branded experiences, and immersive storytelling. We’ve explored similar techniques in our F1 project, where we scanned the Baku pit garage and created a portal-based AR experience that allowed users to step inside and interact with the environment.

Applications:

AR-based location exploration and storytelling

Virtual tourism and event previews

Branded activations using real-world spaces

Video Credit: Ian Curtis

3 - Vuforia Engine 11 – Advanced AR Features for Enhanced Brand Experiences

What’s new? Vuforia Engine 11 provides improved object tracking, spatial navigation, and cloud-based AR for dependable, scalable solutions.

Why it matters? We see this as a gateway to more reliable interactive experiences. Spatial navigation supports features like wayfinding and scavenger hunts, blending practicality with playful AR engagement.

Applications:

Robust AR apps for product demos

Interactive retail displays with precise tracking

Scavenger hunts and guided tours

Video Credit: Vuforia

🎨 Generative AI & Content Creation

4 - Pika AI – Two Major Releases in February 2025

What’s new? Pika AI has had an exciting month with two major updates:

Pika 2.2 introduces Pikaframes, a keyframe transition system enabling smooth video transitions from 1 to 10 seconds. It also supports up to 10 seconds of 1080p resolution video, offering more creative control over AI-generated sequences.

Real-time object and people insertion allows users to seamlessly add new elements into videos without traditional CGI, making AI-driven content creation more dynamic and accessible.

Why it matters? These updates take AI-generated video content to the next level. Pikaframes provides more cinematic and professional storytelling, while the real-time object insertion feature unlocks new possibilities for interactive marketing, VFX, and personalised branding. This makes high-quality video production more efficient and accessible for brands.

Where this is useful:

AI-assisted video production with greater control

High-quality social media and brand storytelling

Personalised and interactive marketing campaigns

Video: Pika Real-time Object Insertion – our experiments

Video: PikaFrames

5 - FLORA – AI-Powered Storytelling & Shot Planning

What’s new? FLORA is a new node-based AI canvas designed for filmmakers. It doesn’t just generate visuals; it analyses stories and suggests shots based on structure and intent.

Why it matters? By breaking down story beats, suggesting shot ideas, and improving iteration speed, FLORA helps filmmakers and brands create purposeful content while retaining creative control.

Where this is useful:

- AI-assisted storyboarding & shot planning

- Faster iteration for film & branded content

- Structured AI-driven narrative creation

6 - Microsoft Muse – AI-Generated Gameplay from a Single Image

What’s new? Microsoft has introduced Muse, an AI model capable of generating entire gameplay sequences from a single image. It draws on real multiplayer game data to build immersive, dynamic experiences.

Why it matters? We see this as a huge step forward in AI-assisted game creation. Imagine brands launching interactive experiences with minimal development time—Muse makes that possible. Whether for promotional gaming experiences, branded storytelling, or interactive retail activations, this technology opens new doors for engagement.

Where this is useful:

- AI-assisted game prototyping for marketing campaigns

- Interactive brand storytelling through gaming

- Rapid content creation for virtual experiences

Learn more about Muse

⚙️ Immersive Tools & Real-Time Interaction

7 - TouchDesigner MediaPipe Plugin – Real-Time AI Face & Pose Tracking

What’s new? The latest MediaPipe plugin for TouchDesigner introduces OpenPose rendering, which supports real-time face and pose tracking for StreamDiffusion.

Why it matters? This is a great addition to our tech stack for real-time visual experiences. Whether it’s live events, performances, or interactive installations, brands can now create AI-powered visuals that react instantly to human movement, adding a new layer of engagement.

Where this is useful:

- Brand activations with interactive visuals

- AI-generated visuals for live events

- Real-time reactive content for immersive exhibitions

Video Credit: @blankensmithing
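For readers curious how tracked poses become the stick-figure skeletons associated with OpenPose rendering, here is a minimal sketch. It assumes landmarks arrive as normalised (x, y) pairs keyed by MediaPipe Pose index. The `BONES` list and the helper names `to_pixels` and `skeleton_segments` are our own illustration, not the plugin's API.

```python
from typing import Dict, List, Tuple

# A few MediaPipe Pose landmark indices (the full model outputs 33).
LEFT_SHOULDER, RIGHT_SHOULDER = 11, 12
LEFT_ELBOW, RIGHT_ELBOW = 13, 14
LEFT_WRIST, RIGHT_WRIST = 15, 16
LEFT_HIP, RIGHT_HIP = 23, 24

# Hypothetical bone list for illustration only; not the plugin's own table.
BONES: List[Tuple[int, int]] = [
    (LEFT_SHOULDER, RIGHT_SHOULDER),
    (LEFT_SHOULDER, LEFT_ELBOW),
    (LEFT_ELBOW, LEFT_WRIST),
    (RIGHT_SHOULDER, RIGHT_ELBOW),
    (RIGHT_ELBOW, RIGHT_WRIST),
    (LEFT_SHOULDER, LEFT_HIP),
    (RIGHT_SHOULDER, RIGHT_HIP),
    (LEFT_HIP, RIGHT_HIP),
]

Point = Tuple[int, int]


def to_pixels(norm_xy: Tuple[float, float], width: int, height: int) -> Point:
    """Convert a normalised (0..1) landmark to pixel coordinates."""
    x, y = norm_xy
    return (round(x * width), round(y * height))


def skeleton_segments(
    landmarks: Dict[int, Tuple[float, float]], width: int, height: int
) -> List[Tuple[Point, Point]]:
    """Return one line segment per bone whose two landmarks were both detected."""
    segments = []
    for a, b in BONES:
        if a in landmarks and b in landmarks:
            segments.append(
                (
                    to_pixels(landmarks[a], width, height),
                    to_pixels(landmarks[b], width, height),
                )
            )
    return segments
```

Each returned segment can then be drawn as a coloured line on a blank frame. Bones with a missing landmark are skipped, so a partly occluded performer still yields a usable skeleton.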

8 - Wemade x NVIDIA ACE AI Boss – Dynamic AI-Powered NPCs

What’s new? Wemade unveils “Asterion,” an AI-powered MMORPG boss that evolves dynamically based on player interactions, using NVIDIA ACE technology.

Why it matters? We love how AI-driven NPCs can create more organic, unpredictable experiences in gaming. Beyond that, AI-powered interactions could also enhance brand storytelling and interactive retail, where digital assistants or virtual brand ambassadors adapt to user behaviour in real time.

Where this is useful:

- AI-driven character behaviour in gaming & storytelling

- Interactive digital brand ambassadors

- Virtual assistants & real-time customer engagement

Video Credit: nvidia

🌐 Interactive Installations & Displays

9 - Weaving Light Tapestry – Laser & Projection Art Installation

What’s new? This installation explores how lasers and projection mapping can weave light into complex, interactive compositions. Layered visuals and structured lighting techniques blend digital artistry with physical space, creating a rich, multidimensional experience that feels almost tangible.

Why it matters? We find this kind of approach exciting because it showcases how light can be used as a design element, not just for spectacle but as a medium for immersive storytelling. The fusion of digital and physical elements opens up fresh possibilities for retail displays, live events, and brand activations.

Where this is useful:

- Large-scale brand activations

- Retail store experiences

- Interactive art installations

Video Credit: Todd Moyer

10 - Muxwave – Interactive LED Gateway

What’s new? The LANG UK stand features an eye-catching interactive LED gateway built using Muxwave technology. This installation blends large-scale visuals with cutting-edge LED display techniques to create a fully immersive experience.

Why it matters? We love how this pushes the boundaries of digital displays, offering brands a way to create striking, high-impact installations for events, retail spaces, and brand activations.

Where this is useful:

- High-end retail displays

- Experiential marketing activations

- Large-scale event and trade show installations

Video Credit: LANG UK

🔧 Hardware & Tools

11 - Meta Aria Gen 2 – Smart Glasses for AI & XR Research

What’s new? Meta has introduced Aria Gen 2, the latest version of its experimental smart glasses designed for AI, XR, and robotics research. Featuring an advanced sensor suite—including an RGB camera, 6DOF SLAM, eye tracking, microphones, and biometric sensors—these glasses push forward the possibilities of hands-free, context-aware computing.

Why it matters? We’ve already received briefs for glasses-based experiences, and it’s clear that 2025 will see further momentum in this space. Whether for AI-assisted interactions, immersive retail applications, or real-time data overlays, smart glasses will be a critical component of future brand activations.

Where this is useful:

- AI-powered real-world overlays for retail & navigation

- Hands-free data access for industrial & creative workflows

- Next-gen XR experiences for events & brand activations

Learn more about Meta Aria

🧪 Research & Development

12 - Meta for Education – Bringing Quest to Schools and Universities

What’s new? Meta’s initiative integrates Quest VR headsets into educational settings, supplying device management and an array of immersive apps.

Why it matters? We find VR to be a versatile tool for training, workshops, and collaborative projects, offering hands-on learning and skill-building in a virtual setting.

Applications:

VR-based corporate training

Immersive lessons for schools

Virtual collaboration and workshops

Learn more about Meta for Education

13 - 3D Gaussian Splatting – AI-Driven City Simulation

What’s new? 3D Gaussian Splatting is a point-based rendering method that represents a real-world scene as a large set of soft, semi-transparent 3D Gaussians, producing highly detailed environments that can be explored in real time. Hierarchical Gaussian splatting extends the technique to entire cityscapes with remarkable realism and efficiency. Combined with procedural tools like Houdini and AI models like NVIDIA Cosmos, it allows for fluid, interactive experiences.

Why it matters? We love how accessible and scalable this approach is. Instead of relying on manual 3D modelling, Gaussian splatting offers a comparatively lightweight way to create realistic environments that render in real time. This makes it well suited to large-scale simulations, immersive brand activations, and even real-time driving experiences where users can navigate and interact with AI-generated cityscapes. The ability to pair this with driving simulations or training modules adds an extra layer of engagement and fun.

Where this is useful:

AI-generated cityscapes for virtual events and gaming

Real-time urban simulations for architecture and planning

Driving experiences and immersive brand activations

Video Credit: Janusch Patas
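To give a feel for the core idea behind splatting, here is a minimal sketch of how the colour of a single pixel can be built up by blending the splats that cover it from front to back. It assumes each splat's opacity at that pixel has already been worked out. The `composite` function and its tuple layout are our own illustration, not code from any particular renderer.

```python
from typing import List, Tuple

Color = Tuple[float, float, float]
Splat = Tuple[float, Color, float]  # (depth, rgb, alpha at this pixel)


def composite(splats: List[Splat]) -> Color:
    """Blend the splats covering one pixel, nearest first."""
    pixel = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light not yet blocked by nearer splats
    for _, rgb, alpha in sorted(splats, key=lambda s: s[0]):
        weight = alpha * transmittance
        for ch in range(3):
            pixel[ch] += weight * rgb[ch]
        transmittance *= 1.0 - alpha
    return (pixel[0], pixel[1], pixel[2])
```

For example, a half-transparent red splat in front of an opaque blue one gives `composite([(2.0, (0.0, 0.0, 1.0), 1.0), (1.0, (1.0, 0.0, 0.0), 0.5)])`, an even mix of the two colours. A real renderer repeats this for every pixel on the GPU, which is where the real-time performance comes from.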

14 - Meshcapade MoCapade 1.0 – Best-in-Class Markerless Motion Capture

What’s new? Meshcapade has launched MoCapade 1.0, a markerless motion capture system that extracts 3D motion from a single video. The company positions it as best-in-class, with a major leap in accuracy and usability.

Why it matters? This is a game-changer for motion-driven experiences. Whether for virtual production, digital fashion, or brand activations, removing the need for tracking suits makes high-quality motion capture more accessible and cost-effective for brands looking to create interactive experiences.

Where this is useful:

AI-assisted motion capture for brand activations

Virtual production workflows for advertising & film

AR/VR content creation for immersive campaigns

Video Credit: Meshcapade

15 - Niantic’s Scaniverse – Exploring 3D Gaussian Splatting on Meta Quest

What’s new? Scaniverse supports photorealistic 3D scanning on mobile devices and uses Gaussian splatting so the captured scenes can be explored in real time on Meta Quest.

Why it matters? It elevates virtual tours with highly detailed environments, enabling robust brand activations and e-commerce displays that feel nearly tangible.

Applications:

Real estate or tourism tours

Immersive product showcases

Educational and museum exhibits

Video Credit: Niantic

Curious about what’s possible? From interactive experiences to AI-driven content creation, new technology is opening up fresh opportunities for engagement. Whether you’re looking to experiment or build something groundbreaking, we’d love to explore ideas with you.